Meta-Learning Approaches for Improving Detection of Unseen Speech Deepfakes

IEEE Spoken Language Technology Workshop (SLT) 2024, Macau, China

Problem Statement

- Deepfake detection systems struggle to generalize to unseen attacks

- Detector developers have no access to the latest TTS/VC generators

- Need to adapt to a new attack/domain with only a few available samples

- Reduce the risk of zero-day (unknown) attacks

Take-Home Message

- ProtoMAML and ProtoNet adapt to new attacks/domains with a limited number of samples

- ProtoMAML demonstrates superior adaptability compared to ProtoNet, but demands higher computational resources

- Few-shot adaptation keeps the system up to date with new deepfakes

Solutions

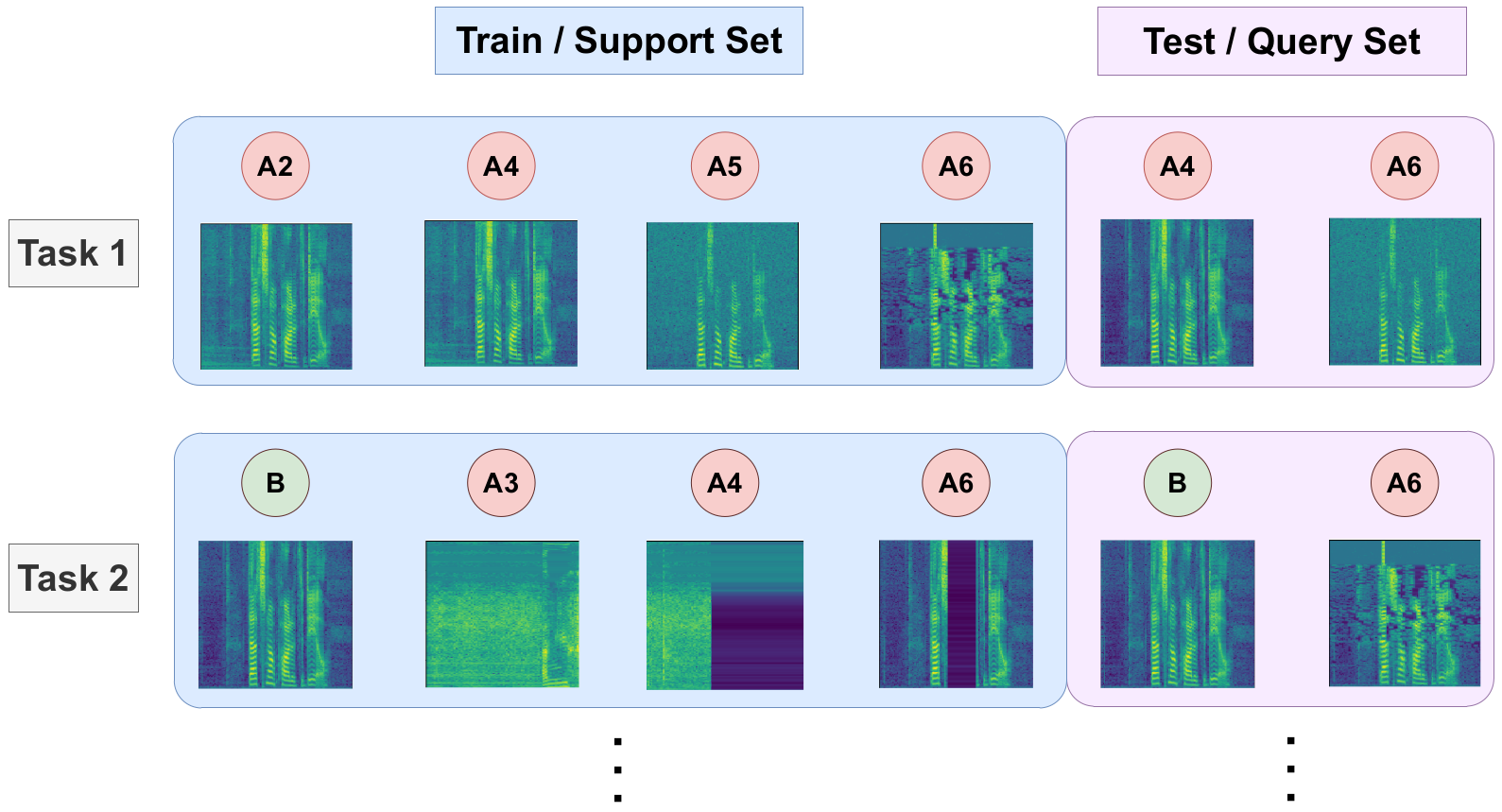

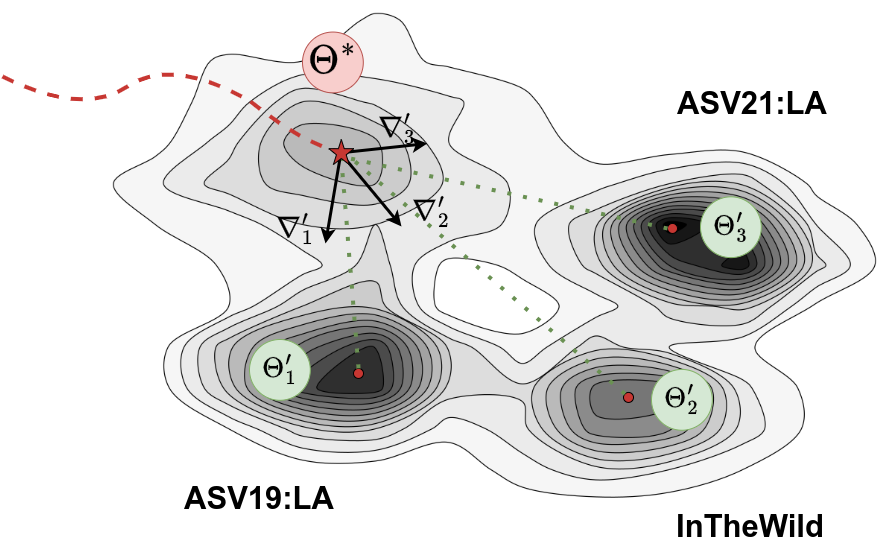

Meta Learning

- Meta-Training - learn generalized parameters on multiple tasks

- Adaptation - few-shot learning for unseen deepfakes

- Evaluation - testing on query samples to assess adaptation
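The three stages above operate on episodes: each task (for example, one attack or one domain) is split into a small labelled support set used for adaptation and a disjoint query set used for evaluation. The sketch below shows one way to sample such an episode in plain Python; the function name `sample_episode`, the `(features, label)` pool format and the toy data are illustrative assumptions, not the authors' code. During meta-training, many such episodes would be drawn from different tasks, the model adapted on each support set, and its loss on the query set used to update the shared parameters.

```python
import random
from collections import defaultdict


def sample_episode(pool, k_shot, q_query, rng=random):
    """Split one task into a support set (adaptation) and a query set (evaluation).

    Hypothetical helper. pool: list of (features, label) pairs from a single task.
    Returns (support, query) with k_shot and q_query examples per class, no overlap.
    """
    by_class = defaultdict(list)
    for x, y in pool:
        by_class[y].append((x, y))
    support, query = [], []
    for label in sorted(by_class):
        items = by_class[label]
        if len(items) < k_shot + q_query:
            raise ValueError(f"class {label!r} has only {len(items)} examples")
        # Draw without replacement so support and query never share a sample.
        picked = rng.sample(items, k_shot + q_query)
        support.extend(picked[:k_shot])
        query.extend(picked[k_shot:])
    return support, query


# Toy task with one-dimensional "features" for bona fide and spoofed audio.
rng = random.Random(0)
pool = [([float(i)], "bonafide") for i in range(10)] + \
       [([float(-i - 1)], "spoof") for i in range(10)]
support, query = sample_episode(pool, k_shot=2, q_query=3, rng=rng)
print(len(support), len(query))  # 4 6
```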

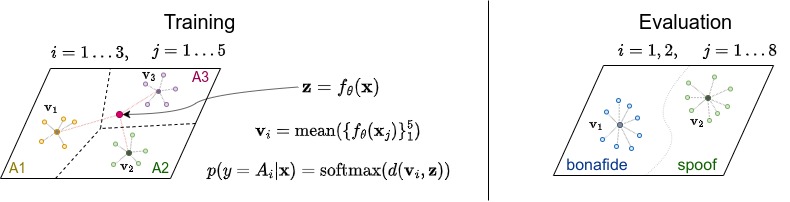

A. Prototypical Network - ProtoNet

Train: Learn Prototype Representations

Evaluation: Nearest to a Prototype
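ProtoNet can be summarized in two steps: each class prototype is the mean embedding of that class's support samples, and a query is assigned to the class whose prototype is nearest. The minimal sketch below uses squared Euclidean distance with a softmax over negative distances, the usual choice for prototypical networks (the poster does not state the distance used). It assumes embeddings have already been produced by the detector's backbone and substitutes toy 2-D vectors; `prototypes` and `classify` are hypothetical helpers. In training, the backbone would be optimized so that the cross-entropy of these probabilities on query samples is minimized across episodes.

```python
import math


def prototypes(support):
    """Hypothetical helper: prototype = mean embedding of each class's support samples."""
    sums, counts = {}, {}
    for emb, label in support:
        acc = sums.setdefault(label, [0.0] * len(emb))
        for j, v in enumerate(emb):
            acc[j] += v
        counts[label] = counts.get(label, 0) + 1
    return {c: [v / counts[c] for v in sums[c]] for c in sums}


def sq_dist(a, b):
    return sum((x - y) ** 2 for x, y in zip(a, b))


def classify(query_emb, protos):
    """Softmax over negative squared distances; returns (nearest label, probabilities)."""
    labels = sorted(protos)
    logits = [-sq_dist(query_emb, protos[c]) for c in labels]
    m = max(logits)  # subtract the max for numerical stability
    exps = [math.exp(z - m) for z in logits]
    total = sum(exps)
    probs = {c: e / total for c, e in zip(labels, exps)}
    return max(probs, key=probs.get), probs


# Toy 2-D embeddings standing in for backbone outputs.
support = [([1.0, 1.0], "bonafide"), ([1.2, 0.8], "bonafide"),
           ([-1.0, -1.0], "spoof"), ([-0.8, -1.2], "spoof")]
protos = prototypes(support)
label, probs = classify([0.9, 1.1], protos)
print(label)  # bonafide
```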

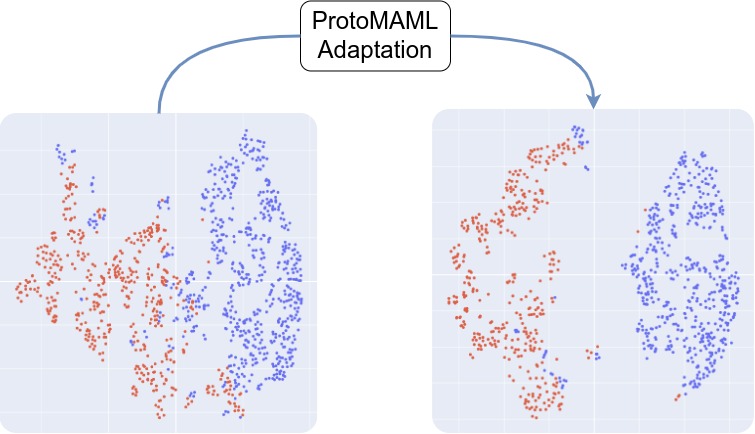

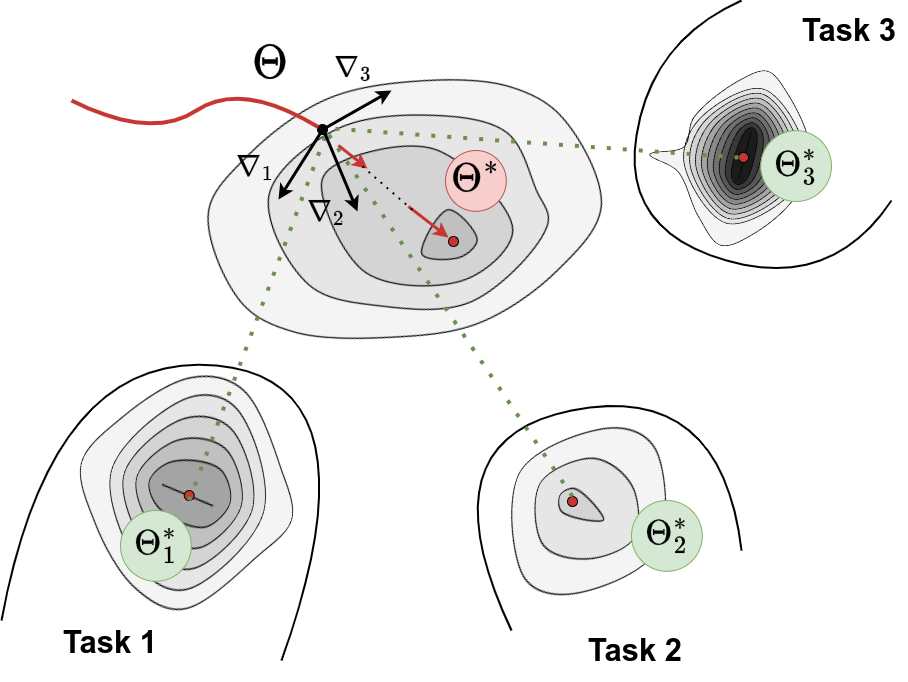

B. Optimization-Based Adaptation - ProtoMAML

Train: Optimize for Each Task

Evaluation: Adapt and Test on Query
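ProtoMAML combines the two ideas: a linear classification head is initialized from the prototypes and then fine-tuned with a few gradient steps on the support set before testing on the query samples. The minimal sketch below assumes the common initialization w_c = 2c_c and b_c = -||c_c||^2, which makes the initial head equivalent to a ProtoNet classifier. As a simplification, only the head is adapted on fixed toy embeddings; the full method also fine-tunes the network and, during meta-training, backpropagates the query loss through these inner-loop steps. All function names, the learning rate and the number of steps are hypothetical.

```python
import math


def prototypes(support):
    """Mean embedding per class, as in ProtoNet."""
    sums, counts = {}, {}
    for emb, label in support:
        acc = sums.setdefault(label, [0.0] * len(emb))
        for j, v in enumerate(emb):
            acc[j] += v
        counts[label] = counts.get(label, 0) + 1
    return {c: [v / counts[c] for v in sums[c]] for c in sums}


def init_head(protos):
    """Assumed ProtoMAML init: w_c = 2 * c_c, b_c = -||c_c||^2."""
    labels = sorted(protos)
    W = [[2.0 * v for v in protos[c]] for c in labels]
    b = [-sum(v * v for v in protos[c]) for c in labels]
    return labels, W, b


def predict_proba(x, W, b):
    logits = [sum(w_j * x_j for w_j, x_j in zip(w, x)) + b_c for w, b_c in zip(W, b)]
    m = max(logits)
    exps = [math.exp(z - m) for z in logits]
    total = sum(exps)
    return [e / total for e in exps]


def support_loss(support, labels, W, b):
    """Mean cross-entropy on the support set."""
    idx = {c: i for i, c in enumerate(labels)}
    return -sum(math.log(predict_proba(x, W, b)[idx[y]]) for x, y in support) / len(support)


def adapt(support, labels, W, b, lr=0.1, steps=5):
    """Inner loop (head only): plain gradient descent on support cross-entropy."""
    idx = {c: i for i, c in enumerate(labels)}
    W, b, n = [row[:] for row in W], b[:], len(support)
    for _ in range(steps):
        gW = [[0.0] * len(row) for row in W]
        gb = [0.0] * len(b)
        for x, y in support:
            p = predict_proba(x, W, b)
            for c in range(len(labels)):
                err = p[c] - (1.0 if c == idx[y] else 0.0)  # dLoss/dlogit_c
                gb[c] += err / n
                for j, x_j in enumerate(x):
                    gW[c][j] += err * x_j / n
        W = [[w - lr * g for w, g in zip(wr, gr)] for wr, gr in zip(W, gW)]
        b = [b_c - lr * g for b_c, g in zip(b, gb)]
    return W, b


support = [([1.0, 1.0], "bonafide"), ([1.2, 0.8], "bonafide"),
           ([-1.0, -1.0], "spoof"), ([-0.8, -1.2], "spoof")]
labels, W0, b0 = init_head(prototypes(support))
W, b = adapt(support, labels, W0, b0, lr=0.1, steps=5)
print(support_loss(support, labels, W, b) < support_loss(support, labels, W0, b0))  # True
```

Increasing `steps` corresponds to more inner-loop adaptation, the quantity varied in the "Effect of Adaptation Steps" plot, at the cost of extra computation.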

Results

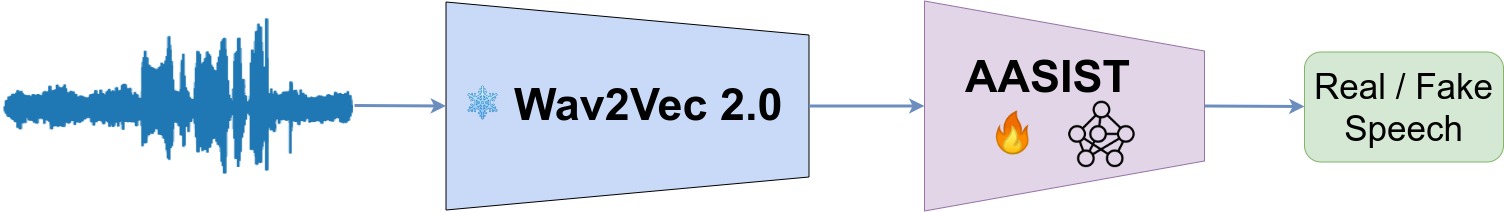

Baseline architecture

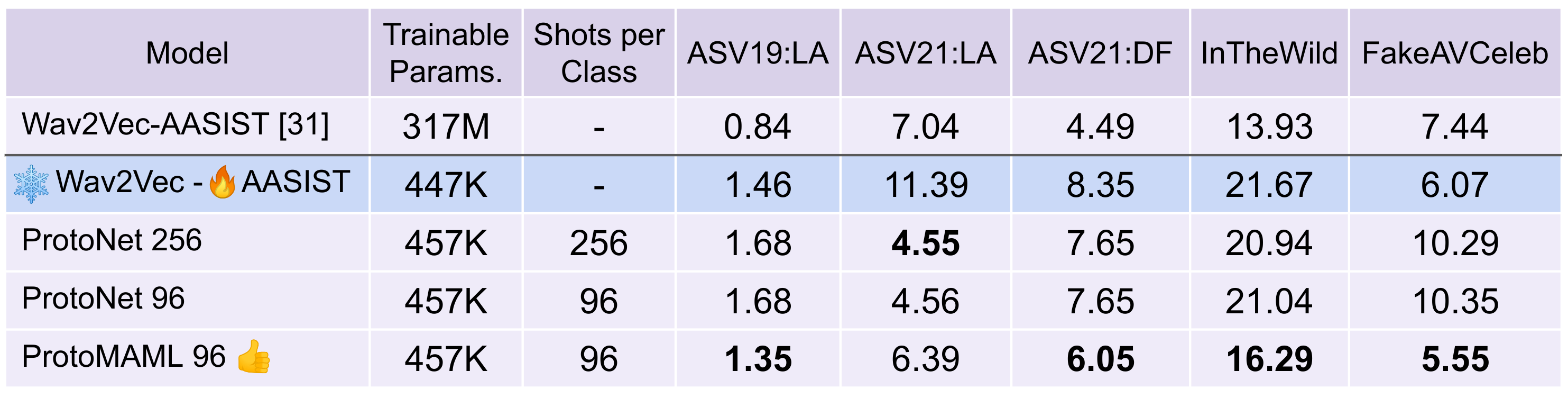

Summary: Baseline / ProtoNet / ProtoMAML, EER (%)
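The results are reported as equal error rate (EER): the operating point where the false acceptance rate (spoofed audio accepted as bona fide) equals the false rejection rate (bona fide audio rejected). A simple threshold-sweep approximation is sketched below. It assumes higher scores mean "more likely bona fide"; `compute_eer` and the toy scores are illustrative and are not the evaluation code behind the reported numbers. Other implementations may interpolate between operating points, so values can differ slightly on small score sets.

```python
def compute_eer(bonafide_scores, spoof_scores):
    """Approximate EER by sweeping a threshold over all observed scores.

    Assumed convention: accept as bona fide when score >= threshold.
    Returns (eer, threshold) at the point where |FAR - FRR| is smallest.
    """
    thresholds = sorted(set(bonafide_scores) | set(spoof_scores)) + [float("inf")]
    best = None
    for t in thresholds:
        frr = sum(s < t for s in bonafide_scores) / len(bonafide_scores)
        far = sum(s >= t for s in spoof_scores) / len(spoof_scores)
        gap = abs(far - frr)
        if best is None or gap < best[0]:
            best = (gap, (far + frr) / 2, t)
    return best[1], best[2]


bonafide = [0.9, 0.8, 0.7, 0.4]
spoof = [0.1, 0.2, 0.3, 0.75]
eer, threshold = compute_eer(bonafide, spoof)
print(f"EER = {eer * 100:.2f}% at threshold {threshold}")  # EER = 25.00% at threshold 0.7
```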

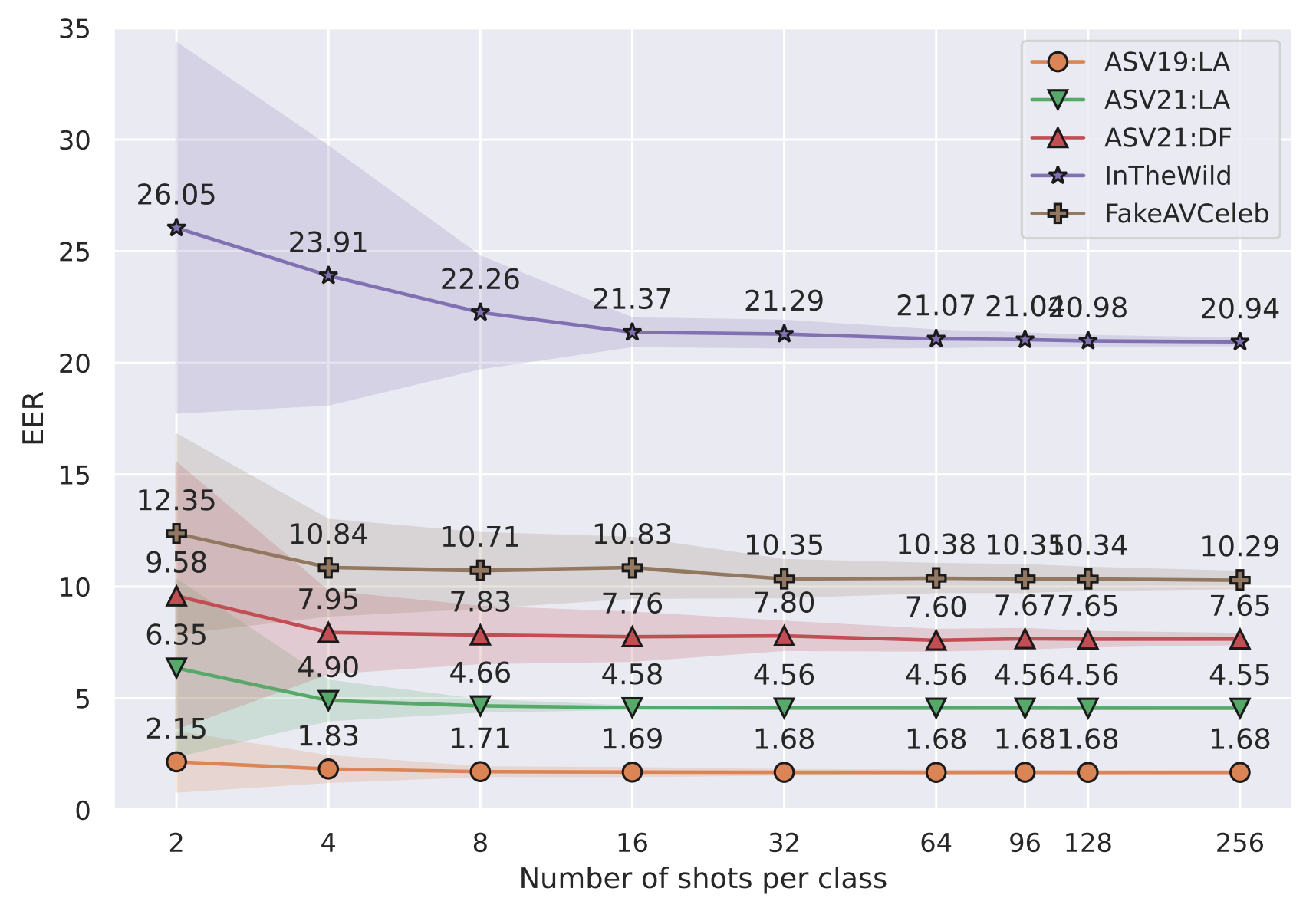

ProtoNet Adaptation with 2 - 256 Shots

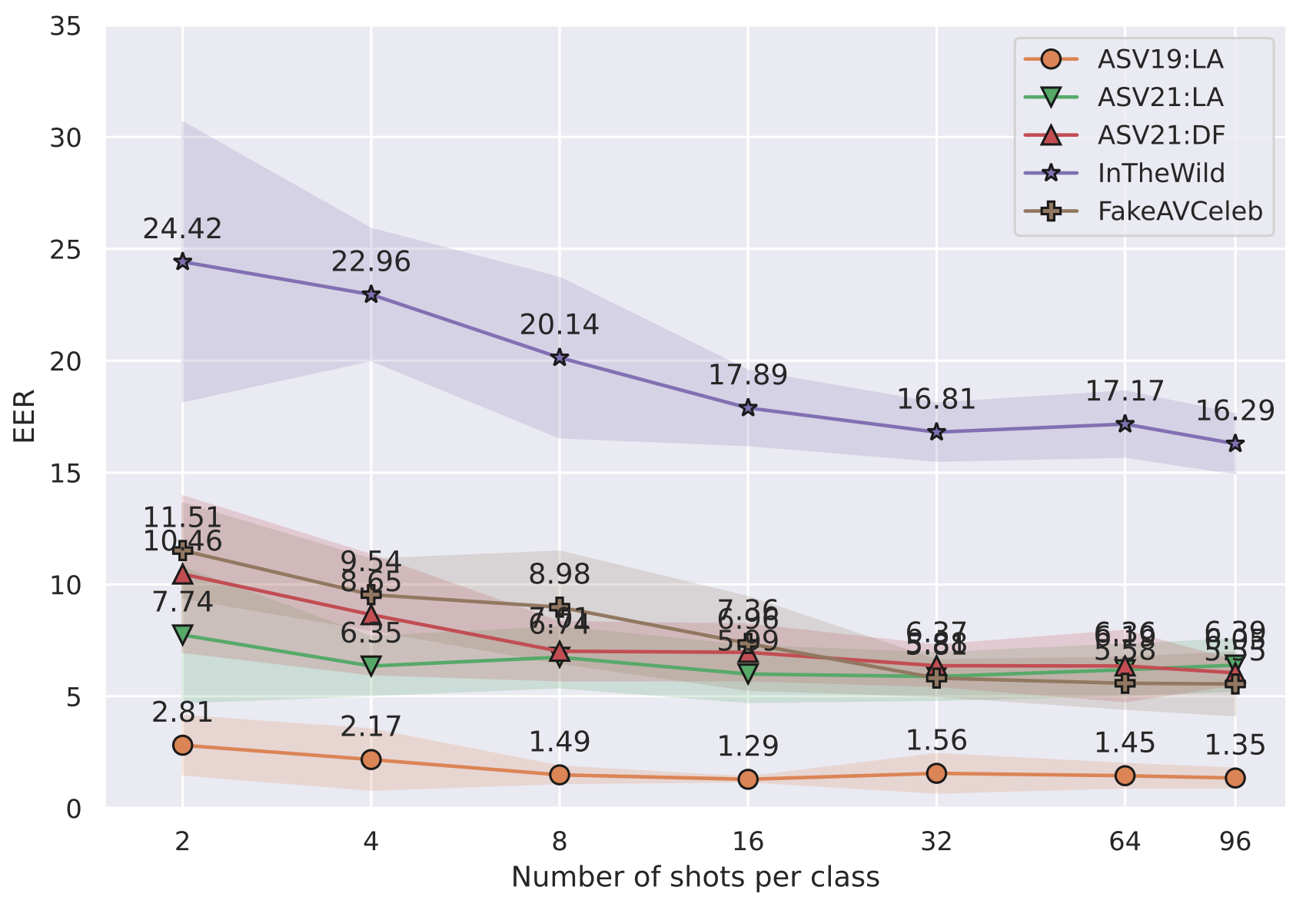

ProtoMAML Adaptation with 2 - 96 Shots

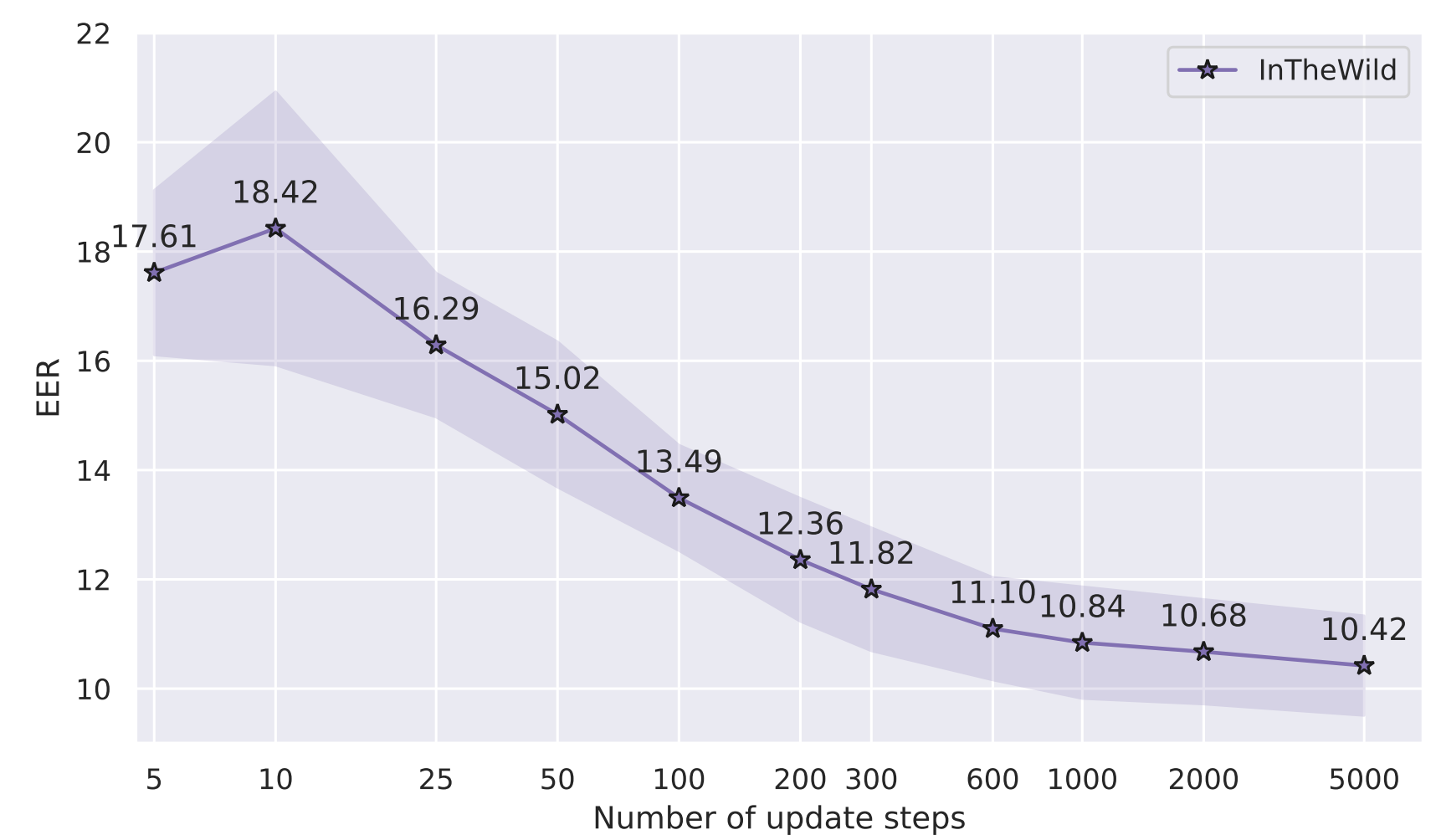

ProtoMAML: Effect of Adaptation Steps

Recommendations

- Use meta-learning for continuous adaptation to keep deepfake detection systems up to date

- Use ProtoNet under resource constraints

- Use ProtoMAML for more accurate adaptation

Conclusions

- Adapting a pre-trained model with a small number of samples from a new attack/domain significantly enhances detection performance

- For example, on the out-of-domain InTheWild dataset, the EER improves from 21.67% to 10.42% using only 96 samples from the unseen dataset

- ProtoMAML outperforms the non-parametric ProtoNet
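In relative terms, the InTheWild improvement quoted above is roughly a halving of the error rate; the arithmetic on the two reported EER values is:

```latex
\frac{\mathrm{EER}_{\text{before}} - \mathrm{EER}_{\text{after}}}{\mathrm{EER}_{\text{before}}}
  = \frac{21.67\% - 10.42\%}{21.67\%}
  = \frac{11.25}{21.67}
  \approx 0.519
```

that is, about a 52% relative reduction in EER.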

BibTeX

@article{MetalearningDeepfake2024,
  title={Meta-Learning Approaches for Improving Detection of Unseen Speech Deepfakes},
  author={Ivan Kukanov and Janne Laakkonen and Tomi Kinnunen and Ville Hautamäki},
  year={2024},
  eprint={2410.20578},
  archivePrefix={arXiv},
  primaryClass={eess.AS},
  url={https://arxiv.org/abs/2410.20578}
}